Verified Security Tests Explained: How, and Why, to Move From TTPs to VSTs

TTP. It is potentially the most overloaded acronym in offensive security today - but what does it mean? While the term has been used for decades in the military, it reached fever pitch only after the MITRE ATT&CK framework started taking off in 2016.

At their root, Tactics, Techniques, and Procedures (TTPs) are designed to encapsulate a specific behavior or effect an adversary may induce on a computer system. They can come in any language (bash, Python, C, ...), work against any system (Windows, macOS, Linux, ...), and be packaged in any format (executable, YML file, ...).

So many variables have made defining TTPs as elusive as the adversaries who implement them.

With so much variance, the cybersecurity industry as a whole has been scrambling to add clarity in the form of classification systems.

MITRE ATT&CK is the primary classification system for TTPs. But the framework only goes into detail on the Tactic and Technique layers. Procedures, with their infinite forms, are left out, as they’re as difficult to wrap your hands around as a Bloom filter.

Why bother classifying them?

TTPs in Operator

Here at Prelude, we have a history of TTP design and classification.

In November 2020, we released our first version of Operator, a command and control application designed around the concept of chained TTPs. The idea was simple in concept but extraordinarily complex in practice: chain individual TTPs together (the output of one becomes the input of another) in order to build intelligent, automated attacks.
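The chaining idea can be sketched in Go as follows. This is a minimal illustration, not Operator's implementation: the `Step` type and the `Chain` function are hypothetical names, and each TTP is modeled as a plain function from input text to output text.

```go
package main

import (
	"fmt"
	"strings"
)

// Step is a hypothetical stand-in for one TTP: it receives the previous
// step's output as its input and returns its own output.
type Step func(input string) (string, error)

// Chain runs the steps in order, feeding each output into the next step.
// It stops at the first error.
func Chain(seed string, steps ...Step) (string, error) {
	out := seed
	for i, step := range steps {
		next, err := step(out)
		if err != nil {
			return "", fmt.Errorf("step %d failed: %w", i, err)
		}
		out = next
	}
	return out, nil
}

func main() {
	// Two harmless placeholder steps: "discover" a user list, then pick the first entry.
	discover := func(string) (string, error) { return "alice,bob", nil }
	pickFirst := func(in string) (string, error) {
		return strings.Split(in, ",")[0], nil
	}

	result, err := Chain("", discover, pickFirst)
	if err != nil {
		fmt.Println("chain failed:", err)
		return
	}
	fmt.Println(result)
}
```

Passing plain text between steps keeps the sketch short, but it also shows the weakness discussed later: every step has to parse whatever the previous one happened to print.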

Before Operator, our founding members built MITRE CALDERA, the first open-source automated adversary emulation framework.

Operator TTPs are YML-based and combine command blocks with the metadata that classifies and describes them. Let's look at an example:

You can mentally separate the code from the non-code portions of this TTP by splitting on the platforms object.

Inside platforms, each supported operating system is listed, along with a set of executors (programs on the OS that can run the command block).

Note that this example TTP includes a payload. A payload is an arbitrary code file that lives elsewhere that is intended to be downloaded when the command block executes on a target system. If you’re using Operator, it takes care of this downloading process for you.

Everything else in this TTP file is, for all intents and purposes, metadata.

TTPs in this format are great for repeatability but come with a few drawbacks:

- The separation of TTP commands and payloads requires technology to manage. In the above case, Operator is the technology required. This makes the TTP hard to grok, translate, or transfer elsewhere.

- The command blocks enforce no standardization, making them prime for “cowboy coding”. This makes validating their safety difficult.

- The output of running the TTP is in stdout/stderr format, meaning we were relying on parsing arbitrary text to understand if a TTP was successful or not.

The biggest drawback, however, is observability: there is no way to determine where a TTP can be executed, to what level of success, and how safe it is to run.

In the example above, can this TTP run in bash and zsh or just sh? What version of sh? What version of Linux? How can I know for sure?

Side Note on Execution Engines

Payloads in general cause the most pitfalls in this TTP format (and many others).

Because payloads can be written and executed in so many different ways, the execution engine that runs the TTP requires a large amount of functionality, and even then, it's a rat race to keep up with new technologies used in command-and-control frameworks.

There are two critical parts of a TTP: the attack code itself - which is innumerable in variation and technology used - and the execution framework that can run the code. The latter is by and large an extension of the former.

Meet Detect

When we sat down to write Detect - a continuous security testing platform - we needed to revisit our structure for TTPs.

We needed them to:

- Provide rock-solid confidence on where they could run

- Apply client and server side checks to ensure safety

- Offer flexibility to run on non-IT operating systems

- Be ready to run at scale, on production endpoints

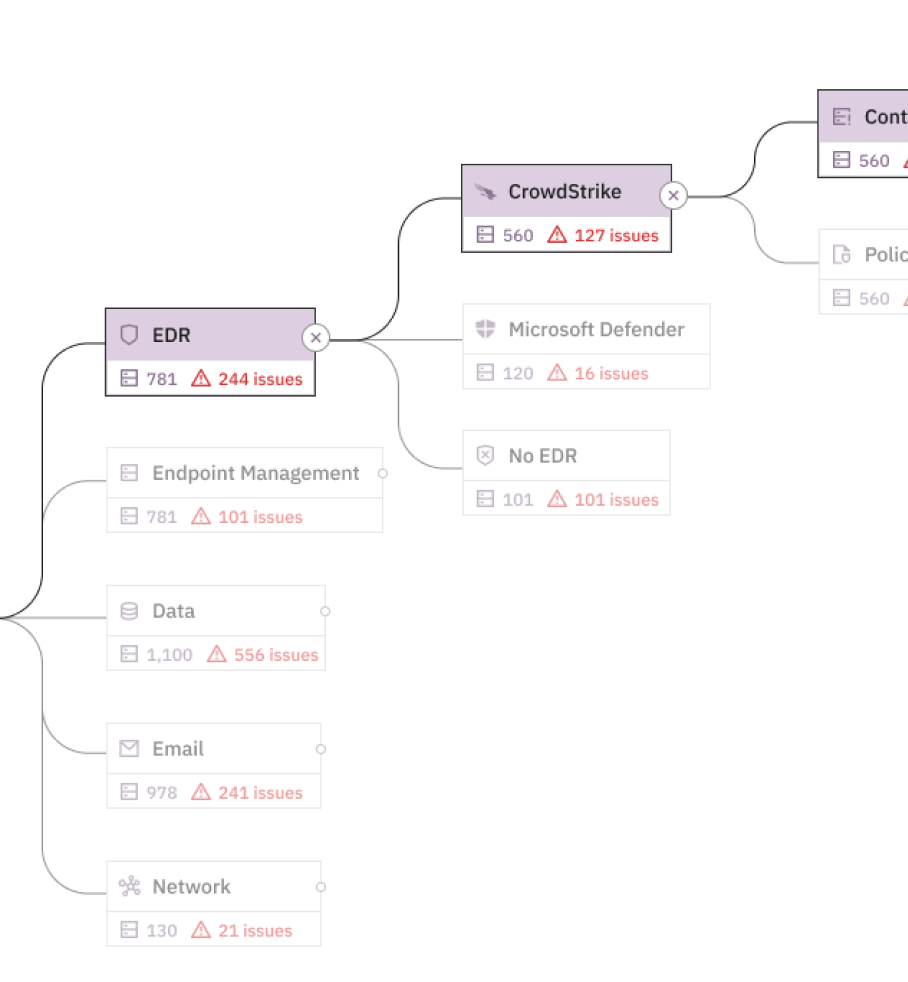

The way Detect works is you deploy probes on all your endpoints and start sending them tests to better understand the security posture of your infrastructure.

Any endpoint in your production environment - anything that runs code - should have a probe running.

These probes could be running on servers, laptops, embedded systems, OT devices, and pretty much anything else. Twice a day, or at whatever intervals you choose, each probe connects to your central hub to get a set of security tests to run.

At this point we paused.

In order to run TTPs at scale in production, we could no longer run them in their current form. Their lack of structure, lack of safety, and reliance on “cowboy coding” made them inherently unsuitable for production use.

TTPs would need to account for the extreme variance in operating systems, as probes would take on the role of the execution engine.

Thinking through this problem logically, we needed to push our TTP format beyond the platform/executor nesting we used in Operator. We needed to account for specific architectures, so that we could write TTPs that work whether or not libc is available and whether or not specific binaries can be found on the system/LD path, and so that we could produce value without relying on stdout/stderr.

These requirements represented the technical constraints for Detect “TTPs” to function at the scale and wide array of systems our probes were running on.

Beyond that, additional technical specifications had to be met to address integrity and, especially, safety:

- TTPs needed to be signed, to ensure code from arbitrary sources wouldn’t be executed on endpoints.

- TTPs needed an organizational structure so that results could be tracked at a high volume without requiring a security engineer to decipher them.

Introducing Verified Security Tests

All of these requirements resulted in two core changes from the Operator structure:

- The code portions of the YML files needed separation from the metadata.

- Code logic needed structure to guarantee consistency from test to test.

Because these requirements were tied so tightly to the scale and safety specs discussed earlier, we decided to move away from the term TTP. The tests we were now writing would be validated first by our internal team and second by the community at large.

So we coined the term Verified Security Test (VST).

We then sat down to make the changes.

The Anatomy of a Verified Security Test

The non-code portions were easy. We trimmed down the required properties to just test ID, name, and owner (account_id). Here is an example of a VST (metadata):
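As a rough sketch in Go, the three required properties could be modeled like this. The struct name, the JSON key names, and the account value are assumptions made for illustration; only the set of properties (ID, name, owner) and the health check ID that appears later in this section come from the text.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// TestMetadata holds the three required properties named in the text.
// The JSON key names are assumptions for this sketch.
type TestMetadata struct {
	ID        string `json:"id"`
	Name      string `json:"name"`
	AccountID string `json:"account_id"`
}

// Validate reports an error if any required property is empty.
func (m TestMetadata) Validate() error {
	if m.ID == "" || m.Name == "" || m.AccountID == "" {
		return errors.New("id, name, and account_id are all required")
	}
	return nil
}

func main() {
	m := TestMetadata{
		ID:        "39de298a-911d-4a3b-aed4-1e8281010a9a",
		Name:      "Health check",
		AccountID: "example-account", // placeholder owner, not a real account
	}
	if err := m.Validate(); err != nil {
		fmt.Println("invalid metadata:", err)
		return
	}
	out, err := json.MarshalIndent(m, "", "  ")
	if err != nil {
		fmt.Println("encoding failed:", err)
		return
	}
	fmt.Println(string(out))
}
```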

Next came the code files, which we started referring to as variants. Variants have a standardized naming format that looks like this:

ID_platform-architecture

For the health check test above, we could attach a variant for a macOS system running an M1 processor that has the following file name:

39de298a-911d-4a3b-aed4-1e8281010a9a_darwin-arm64

The Intel version would be:

39de298a-911d-4a3b-aed4-1e8281010a9a_darwin-x86_64

For a given VST, we decided you should be able to attach any number of variants to cover different platforms and architectures.
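The naming format is simple enough to build programmatically. The Go sketch below is illustrative only: `VariantName` and `archNames` are hypothetical names, and the mapping covers just the two architecture labels shown above. Note that Go reports Intel 64-bit as `amd64`, so it has to be translated to the `x86_64` label.

```go
package main

import (
	"fmt"
	"runtime"
)

// archNames maps Go's GOARCH values to the architecture labels used in the
// file names above. Only the two labels shown in the text are covered; any
// other mapping would be a guess.
var archNames = map[string]string{
	"arm64": "arm64",
	"amd64": "x86_64",
}

// VariantName builds a file name in the ID_platform-architecture format.
func VariantName(id, goos, goarch string) (string, error) {
	arch, ok := archNames[goarch]
	if !ok {
		return "", fmt.Errorf("no architecture label known for %q", goarch)
	}
	return fmt.Sprintf("%s_%s-%s", id, goos, arch), nil
}

func main() {
	const healthCheck = "39de298a-911d-4a3b-aed4-1e8281010a9a"

	// Name the variant that would match the machine this program runs on.
	name, err := VariantName(healthCheck, runtime.GOOS, runtime.GOARCH)
	if err != nil {
		fmt.Println("cannot name variant:", err)
		return
	}
	fmt.Println(name)
}
```

Run on an M1 Mac, this would print the first file name shown above.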

Moving into language selection, we wanted to be less opinionated about the actual programming language but have strong opinions on the structure.

We settled on Go as the primary language for VSTs - but technically Detect will run a VST in any language that abides by the structure requirements.

Prelude hosts a GitHub repository of open-source VSTs for the public.

For structure, we decided each variant must contain:

- A test function, which executes the test code

- A clean function, which reverses any effects the test code may incur

- An entry point that calls test if no arguments are passed in, or clean otherwise

Additionally, the test and clean functions must exit with a status code from a predefined list of options describing the granularity of what occurred.

Results of a Verified Security Test

This last part is of particular importance.

Recall that a pitfall of TTPs is their dependence on a security engineer to contextualize the results. VSTs would need to have self-explanatory output. This is another critical requirement for scale.

The following table gives test authors the ability to select granular conditions for what happened during code execution:

Each test and clean function is required to exit with one of these codes. The codes are then easily aggregated, providing you with intelligence around your security posture - whether you have 10 endpoints or 10,000.
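To show why numeric codes aggregate so easily, here is a small Go sketch that tallies results by exit code. The `Result` type, the `Tally` function, and the code values are hypothetical; they are not Detect's data format or its predefined codes.

```go
package main

import (
	"fmt"
	"sort"
)

// Result is one endpoint's report for one test run. The field names are
// hypothetical and do not reflect Detect's wire format.
type Result struct {
	Endpoint string
	Code     int
}

// Tally counts how many endpoints reported each exit code.
func Tally(results []Result) map[int]int {
	counts := make(map[int]int)
	for _, r := range results {
		counts[r.Code]++
	}
	return counts
}

func main() {
	// Placeholder codes; real values would come from the predefined list.
	results := []Result{
		{"laptop-1", 0},
		{"laptop-2", 0},
		{"server-1", 1},
	}
	counts := Tally(results)

	// Print in ascending code order so the output is stable.
	codes := make([]int, 0, len(counts))
	for c := range counts {
		codes = append(codes, c)
	}
	sort.Ints(codes)
	for _, c := range codes {
		fmt.Printf("code %d: %d endpoint(s)\n", c, counts[c])
	}
}
```

The same tally works unchanged for 10 results or 10,000, which is not true of approaches that parse free-form stdout.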

This visual support helps outline the syntax of a VST

Scheduling your first Verified Security Test

Interested in using Detect? Sign up for free at https://platform.preludesecurity.com. Take it for a spin by running your first VST: Will your computer quarantine a malicious Office document?
